Hinton’s Biological Insight

Geoffrey Hinton, the father of deep learning, made a few thought-provoking points at the ReWork Deep Learning Summit in Toronto last week. Hinton often looks to biology for inspiration. In this post, I’ll share some of what he said and expand on it.

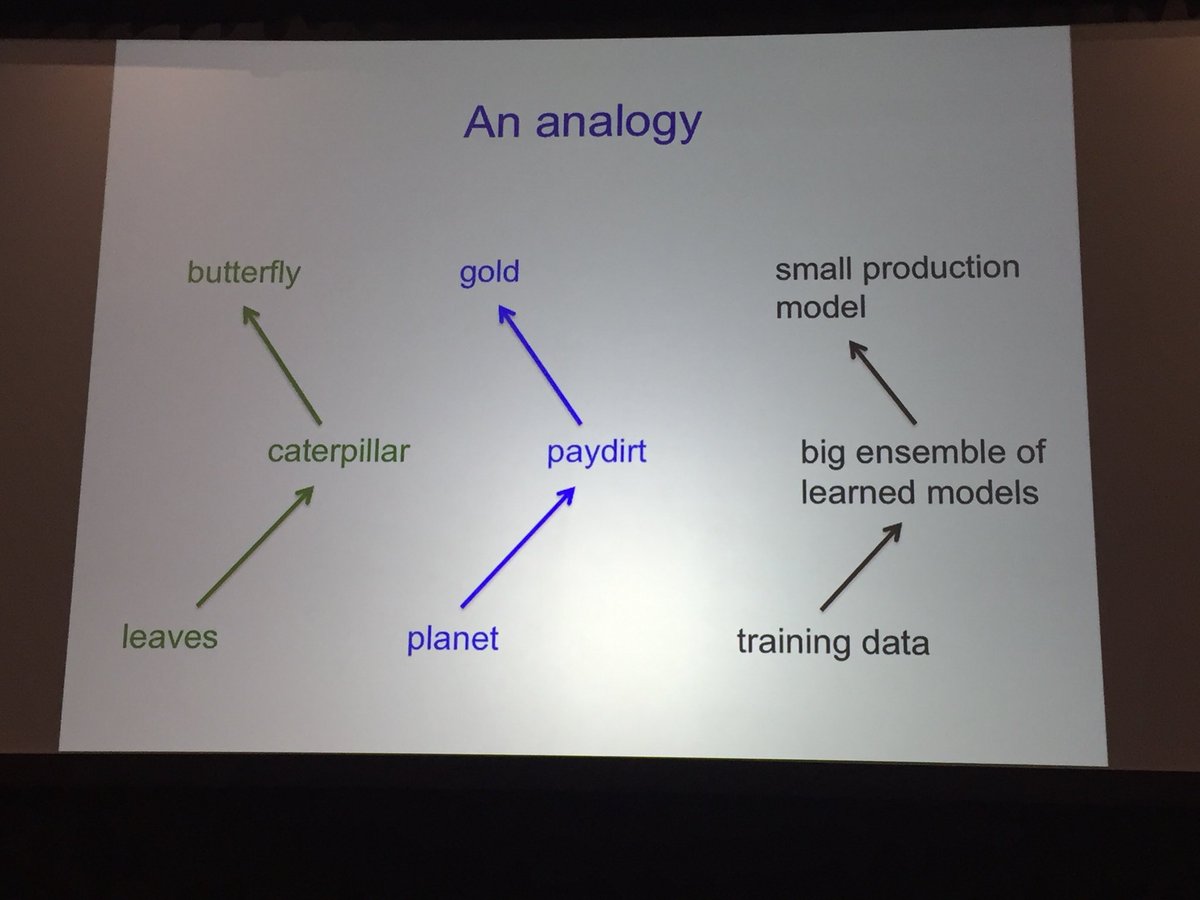

Hinton started off with an analogy. A caterpillar is really a leaf-eating machine: it’s optimized to eat leaves. Then it turns itself into goo and becomes something else, a butterfly, that serves a different purpose.

Similarly, the planet has minerals. Humans build infrastructure to transform earth into paydirt, and then a different set of chemical reactions is applied to the paydirt to yield gold, which serves yet another purpose.

Training data goes through a similar transformation: a big ensemble of learned models converts it into a set of parameters, and that knowledge can then be transferred into a small production model that serves a different purpose. This raises the whole question of what knowledge is.
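One well-known way to move knowledge from a big ensemble into a small model is knowledge distillation (Hinton, Vinyals and Dean, 2015): the small "student" is trained to match the ensemble's softened output probabilities rather than only hard labels. The sketch below is a minimal illustration under stated assumptions, not the method from the talk. The "ensemble" is three noisy copies of a hypothetical linear model on synthetic data, the student is a linear softmax classifier trained by plain gradient descent, and the temperature `T = 2.0` and all function names are illustrative choices.

```python
import numpy as np

def softmax(logits, temperature=1.0):
    """Numerically stable softmax; a temperature above 1 softens the distribution."""
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def ensemble_soft_targets(teacher_logits, temperature):
    """Average the softened predictions of every model in the ensemble.

    teacher_logits has shape (n_models, n_examples, n_classes).
    """
    return softmax(teacher_logits, temperature).mean(axis=0)

def distill_linear_student(x, soft_targets, temperature, lr=1.0, steps=1000):
    """Fit a small linear softmax "student" to the ensemble's soft targets.

    Plain gradient descent on the mean cross-entropy between the soft targets
    and the student's temperature-softened predictions.
    """
    w = np.zeros((x.shape[1], soft_targets.shape[1]))
    for _ in range(steps):
        p = softmax(x @ w, temperature)
        # d(loss)/dw; the 1/temperature factor comes from dividing the logits by T.
        grad = x.T @ (p - soft_targets) / (len(x) * temperature)
        w -= lr * grad
    return w

def cross_entropy(targets, probs):
    return -np.mean(np.sum(targets * np.log(probs + 1e-12), axis=1))

# Toy setup (hypothetical): three "teacher" linear models that are noisy copies
# of one underlying model stand in for the big ensemble.
rng = np.random.default_rng(0)
x = rng.normal(size=(500, 5))
base_w = rng.normal(size=(5, 3))
teacher_logits = np.stack([x @ (base_w + 0.3 * rng.normal(size=(5, 3))) for _ in range(3)])

T = 2.0
targets = ensemble_soft_targets(teacher_logits, T)
student_w = distill_linear_student(x, targets, T)

loss_before = cross_entropy(targets, softmax(np.zeros((500, 3)), T))
loss_after = cross_entropy(targets, softmax(x @ student_w, T))
agreement = np.mean((x @ student_w).argmax(axis=1) == targets.argmax(axis=1))
print(f"loss {loss_before:.3f} -> {loss_after:.3f}, agreement {agreement:.2%}")
```

Raising the temperature spreads probability over the wrong classes too, and those relative probabilities (which wrong answers the ensemble considers plausible) are part of what the student picks up.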

How is knowledge transmitted in human biological systems? Hinton pointed out that biology limits the size of our brains, so we humans invented a number of ways to do transfer learning. Typically, elders or experts teach younger people. Hinton ran a number of experiments and arrived at the insight that artificial neural networks (ANNs) can share parameters, which had a few expected effects and a few surprising ones. He noted that humans don’t share parameters; we share something quite different. He left it to the audience to think about what that might be.
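One simple picture of what it means for neural networks to share parameters: identical copies of a model each learn from their own slice of data, then pool what they learned by averaging their weights. The sketch below assumes a toy linear-regression model, four hypothetical data shards, and synchronous averaging after every step. It illustrates the general idea only and is not a description of Hinton’s experiments; the function names are made up for this example.

```python
import numpy as np

def local_gradient(w, x, y):
    """Gradient of the mean squared error of a linear model y ~ x @ w."""
    return 2 * x.T @ (x @ w - y) / len(x)

def train_with_shared_parameters(shards, dim, lr=0.1, rounds=200):
    """Each copy takes one gradient step on its own shard; then all copies
    average their parameters and continue from the shared result."""
    copies = [np.zeros(dim) for _ in shards]
    for _ in range(rounds):
        copies = [w - lr * local_gradient(w, x, y) for w, (x, y) in zip(copies, shards)]
        shared = np.mean(copies, axis=0)
        copies = [shared.copy() for _ in shards]
    return copies[0]

rng = np.random.default_rng(1)
true_w = np.array([2.0, -1.0, 0.5])   # hypothetical ground truth
shards = []
for _ in range(4):                     # four copies, each with its own 50 examples
    x = rng.normal(size=(50, 3))
    shards.append((x, x @ true_w + 0.01 * rng.normal(size=50)))

w_shared = train_with_shared_parameters(shards, dim=3)
print(np.round(w_shared, 2))
```

With equally sized shards, averaging after every step is equivalent to gradient descent on all four shards at once, so each copy ends up benefiting from data it never saw directly.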

I have a few thoughts.

So what’s the problem here?

Ensembles of huge neural networks are expensive: they take a lot of energy to run. But they work, and they’re very effective at learning skills like reading, hearing, or speaking. They learn specifics very well, but they don’t generalize very well.

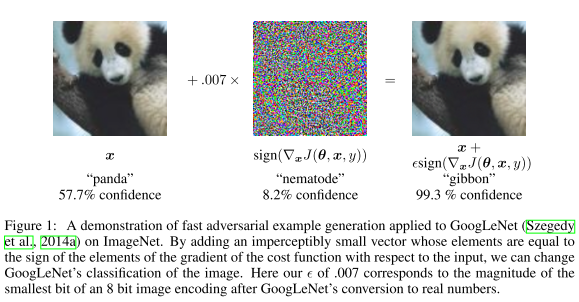

In the image above, a machine predicts “panda”. Then, when a faint noise pattern (one the machine on its own classifies as a roundworm, or nematode) is added to the picture, the machine becomes very sure that it’s looking at a gibbon.

A panda, of course, doesn’t really look like a gibbon.
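The panda-and-gibbon picture appears to be the well-known example from Goodfellow, Shlens and Szegedy’s 2014 paper on adversarial examples, which introduced the fast gradient sign method (FGSM): nudge every input feature by a tiny amount ε in whichever direction increases the model’s loss. The sketch below applies FGSM to a hypothetical logistic-regression classifier with random weights rather than a real image model; the dimensionality, weights, and `eps = 0.05` are illustrative assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm(x, y, w, b, eps):
    """Fast gradient sign method against a logistic-regression classifier.

    For binary cross-entropy loss, the gradient with respect to the input is
    (p - y) * w, so the attack shifts every feature by exactly +/- eps in the
    direction that increases the loss.
    """
    p = sigmoid(x @ w + b)
    grad_x = (p - y) * w
    return x + eps * np.sign(grad_x)

rng = np.random.default_rng(0)
d = 1000                                  # many input features, like the pixels of an image
w = rng.normal(scale=0.1, size=d)         # hypothetical "trained" weights
b = 0.0
# A noisy input the model assigns to class 1 (standing in for "panda").
x = 0.02 * np.sign(w) + rng.normal(scale=0.1, size=d)

x_adv = fgsm(x, y=1, w=w, b=b, eps=0.05)
p_clean = sigmoid(x @ w + b)
p_adv = sigmoid(x_adv @ w + b)
print(f"P(class 1): clean {p_clean:.3f}, adversarial {p_adv:.3f}")
```

Each feature moves by only 0.05, about half the noise already present in the input, but because the model sums over 1,000 features, the small nudges add up and flip its prediction. The paper argues that this kind of accumulation in high-dimensional, nearly linear models is one reason adversarial examples exist.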

A lot of effort is going into solving this generalization (robustness) problem by exposing different machines to different environments and having them share what they learn. Much of that work has focused on sharing pieces of mathematical knowledge. It’s also possible that an ensemble of smaller machines may be more energy efficient than a single massive one. At least, there’s some intuition behind this.

What are a few other ways that knowledge is shared?

Humans tell stories. Many animals emit chemicals. What else might a machine emit to help other machines learn? Nature offers many ideas that could be applied here.

There’s some good intuition there.